Making a Fast Quad-Core Gaming CPU - lopezbeturped1953

In a recent hardware deep-dive, we took a look at how CPU cores and cache impact gaming performance. To do that, we used three Intel Core processors and compared their core scaling performance, revealing that in today's games most of the performance gains you see when going from a Core i5-10600K to a Core i7-10700K or even a Core i9-10900K are largely attributable to the increase in L3 cache capacity.

This was an interesting analysis because most people who upgrade from an older generation Intel part such as the Core i7-8700K to the newer Core i9-10900K and see a strong performance uplift in games tend to believe this comes as a result of the 67% increase in cores, but for the most part it's actually due to the 67% increase in L3 cache, at least that's the case for today's most demanding games.
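Both 67% figures are plain percentage increases. A minimal Python check, assuming the published specs of 6 cores and 12MB of L3 for the Core i7-8700K and 10 cores and 20MB of L3 for the Core i9-10900K (the helper name `pct_increase` is our own):

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage increase when going from `old` to `new`."""
    return (new - old) / old * 100.0

# Core i7-8700K -> Core i9-10900K (spec-sheet values, assumed here)
cores = pct_increase(6, 10)    # 6 -> 10 cores
l3_mb = pct_increase(12, 20)   # 12MB -> 20MB of L3 cache

print(f"cores: +{cores:.0f}%, L3 cache: +{l3_mb:.0f}%")
```

Because both resources grow by the same ratio, a simple before/after comparison between these two parts cannot tell you which one is responsible for the gain; that is why the core-scaling tests isolate them.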

Coming out of that testing, many of you wanted to know how much difference the L3 cache capacity makes with just 4 cores active, questioning if the margins would be even greater. So we've gone back and retested a 4-core, 8-thread configuration while adding three more games to the list along with a fourth processor, the 4C/8T Core i3-10105F.

Because the Core i3 models are locked, we're unable to use a 4.5 GHz clock frequency; instead the 10105F ran at 4.2 GHz, which is its spec all-core frequency, so it's going to be running at a 7% lower frequency than the K-SKU parts. The Core i3-10325 would have been more suitable for this test, but we were unable to source one in time. Even though the Core i3 part is running at a disadvantage, it should remain an interesting addition given its much more limited 6MB L3 cache.
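Strictly speaking, the size of that clock gap depends on which part is treated as the baseline. A quick Python check using only the two frequencies quoted above (the variable names are our own):

```python
K_SKU_GHZ = 4.5  # fixed all-core clock used for the K-SKU parts in this test
I3_GHZ = 4.2     # spec all-core clock of the Core i3-10105F

# How much lower the i3 runs, relative to the K-SKU clock
deficit = (K_SKU_GHZ - I3_GHZ) / K_SKU_GHZ * 100
# How much higher the K-SKU parts run, relative to the i3 clock
advantage = (K_SKU_GHZ - I3_GHZ) / I3_GHZ * 100

print(f"i3 clock deficit: {deficit:.1f}%")
print(f"K-SKU clock advantage: {advantage:.1f}%")
```

The Core i3 runs about 6.7% slower than the K-SKU parts, which in turn run about 7.1% faster than the Core i3; either way the gap rounds to roughly 7%.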

The fastest quad-core CPU that Intel has produced to date is the Core i7-7700K, or one of the higher clocked Comet Lake Core i3s, such as the Core i3-10325, some of which boast an 8MB L3 cache, or 2MB more than the 10105F. So it's going to be intriguing to see what sort of gains would be possible using a quad-core CPU with 20MB of L3 cache, something we can achieve with the 10900K by disabling half a dozen cores on that part.

To put this test together we used the Gigabyte Z590 Aorus Xtreme motherboard, clocking the three Intel K-SKU CPUs at 4.5 GHz with a 45x multiplier for the ring bus, and used DDR4-3200 CL14 dual-rank, dual-channel memory with all primary, secondary and tertiary timings manually configured. The Core i3-10105F used the same spec memory.

The bulk of the testing was run with the Radeon RX 6900 XT as it's the fastest 1080p gaming graphics card you can buy, although we've included some results with the RTX 3090 for a look at Nvidia's overhead, which we suspect will affect the quad-core configurations.

Benchmarks

We'll start with Rainbow Six Siege, where we previously saw the most significant performance differences between cache capacities. For example, with all CPUs locked to 6 cores, we saw a massive 18% increase going from 12MB to 20MB of L3 cache.

Then with only 4 cores enabled, the margin from the 10600K to the 10900K is actually reduced to 13%, quite a bit less than the margin seen with 6 cores enabled. This gives us a hint that the larger L3 cache becomes less effective at boosting performance with fewer cores available to use it.

This is best illustrated by the Core i3-10105F, which was just 9% slower than the 10600K, and remember it is clocked 7% lower, so presumably at least half that margin is down to the difference in clock speed. It's still interesting to note that it was possible to improve performance over the 4-core Core i3 by 24% when using the 10900K with just 4 cores active. That's a massive increase given both CPUs use the same Comet Lake architecture, with the only differences being a 7% clock frequency variation and the L3 cache capacity, which is over 3x larger for the i9 part.
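One rough way to separate the two factors is to assume, optimistically, that frame rate scales linearly with core clock, and attribute whatever is left over to the cache. This is a simplifying assumption of ours, not the method used for the results above; only the 24% gain and the two clock speeds come from this test, and the function `split_gain` is hypothetical.

```python
def split_gain(total_speedup: float, fast_clock: float, slow_clock: float) -> tuple[float, float]:
    """Split a measured speedup into a clock-speed part and a residual part.

    Assumes frame rate scales linearly with clock (an optimistic upper bound),
    so clock alone explains at most fast_clock / slow_clock.
    Returns (clock share, residual share) as percentages.
    """
    clock_factor = fast_clock / slow_clock
    residual_factor = total_speedup / clock_factor  # the part clock cannot explain
    return (clock_factor - 1) * 100, (residual_factor - 1) * 100

# 4-core 10900K (20MB L3, 4.5 GHz) vs Core i3-10105F (6MB L3, 4.2 GHz): 24% faster
clock_pct, cache_pct = split_gain(1.24, 4.5, 4.2)
print(f"clock: up to {clock_pct:.1f}%, remainder (cache): at least {cache_pct:.1f}%")
```

Under that assumption the clock difference accounts for at most about 7.1%, leaving roughly a 15.7% gain that can only come from the larger L3 cache, since that is the only other difference between the two parts.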

The takeaway here is that not all cores are equal: even if the cores are physically the same, a difference in cache capacity can make all the difference. That, and cramming more cache into a CPU is still beneficial with just 4 cores active, but it's less effective than what we saw with 6 cores.

Next up we have Assassin's Creed Valhalla, which is a heavily GPU-bound title, especially when using the 'Ultra High' quality preset, even with a Radeon RX 6900 XT at 1080p. As a result the benchmark relies almost entirely on the GPU, and only the Core i3 sees a small dip in 1% low performance, suggesting that frame time performance isn't as good with the 6MB L3 cache, but not significantly worse either.

A new addition to this testing is Battlefield V. Here we can see that the 20MB L3 cache of the 10900K is very beneficial, even when limited to 6 cores. Dialing down to 4 cores creates a CPU bottleneck that can't be solved with more cache. 1% low performance was improved by 9% when going from 16MB to 20MB, which isn't nothing, but it's less than the 13% gain we saw with 6 cores enabled.

Now, with only 4 cores enabled, the 10900K was comparable to the stock 10600K. As a matter of fact, its frame time performance was better, improving 1% lows by a 12% margin. When it came to 1% low performance the Core i3 struggled a little, dipping down to 71 fps, making it 19% slower than the 10600K and a massive 30% slower than the 10900K. Again, that's an incredible difference that's almost entirely down to the difference in L3 cache capacity.

The F1 2020 results are similar to what we've seen so far, where the L3 cache capacity made more of a difference with 6 cores, though we do see some difference with 4 cores active. The 10700K was just 3% slower than the 10900K, while the 10600K was a further 3% slower than the 10700K. So, pretty consistent scaling there. The Core i3 part, though, was 8% slower than the 10600K, or 11% slower if we look at the 1% low results.

We can estimate that at most ~5% of that margin could be attributed to the difference in clock speed, and if that's the case, scaling is still roughly in line with what we saw for the K-SKUs.

Hitman is a CPU-intensive title, and we can see a 9% increase in performance when going from a 12MB to 20MB L3 cache with 6 cores enabled. That margin was reduced with just 4 cores enabled, this time to 6%. Then we saw a 9% drop in performance from the 10600K to the 10105F.

Horizon Zero Dawn is a lot like Assassin's Creed Valhalla in the sense that it's mostly a GPU-bound game, even at 1080p with a 6900 XT. As a result, the 4-core configurations weren't a great deal slower than what we saw with 6, 8 and 10 cores enabled. The Core i3 part also performed well relative to the higher-end Core i5, i7 and i9 models.

Cyberpunk 2077 is a very CPU-intensive game and you will see a huge benefit when upgrading to a modern 6-core processor, such as the Core i5-10600K. That said, had the 10600K been armed with a 20MB L3 cache, it would be 13% faster in this title when looking at 1% low performance. That margin remained fairly consistent with just 4 cores enabled, as the 10900K configuration was 12% faster than the 10600K.

Interestingly, the 4-core 10600K configuration was just 6% faster than the Core i3-10105F on average, but 19% faster when comparing 1% low performance, and this is where the Core i3 really struggled, with just 64 fps.

Looking at the Core i3 part, we see a massive 56% performance disparity between the 1% low and average frame rate, whereas the 10600K sees just a 39% margin, which suggests much more consistent frame time performance on the 10600K.
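For context, the 1% low figure is derived from the slowest frames in a run, so a large gap between it and the average points to uneven frame pacing. Benchmarking tools differ in exactly how they compute it; the sketch below uses one common convention (averaging the slowest 1% of frame times) with made-up frame-time data, and expresses the gap as how far the average sits above the 1% low, which is our assumption about how such a percentage is calculated.

```python
def fps_stats(frame_times_ms: list[float]) -> tuple[float, float]:
    """Return (average fps, 1% low fps) for a list of frame times in ms.

    Average fps: frames rendered divided by total time.
    1% low fps: fps implied by the mean of the slowest 1% of frames
    (one convention; some tools use the 99th-percentile frame time instead).
    """
    n = len(frame_times_ms)
    avg_fps = 1000.0 * n / sum(frame_times_ms)
    slowest = sorted(frame_times_ms, reverse=True)[: max(1, n // 100)]
    low_fps = 1000.0 / (sum(slowest) / len(slowest))
    return avg_fps, low_fps

# Hypothetical run: 990 frames at 10 ms (100 fps) plus 10 slower frames at 15 ms
frames = [10.0] * 990 + [15.0] * 10
avg, low = fps_stats(frames)
gap_pct = (avg - low) / low * 100  # how far the average sits above the 1% low
print(f"avg {avg:.1f} fps, 1% low {low:.1f} fps, gap {gap_pct:.0f}%")
```

Only 1% of the frames are slow here, yet they barely move the average while dragging the 1% low far below it; a few stutters are enough to open up a gap like the ones measured on the Core i3.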

It's worth noting that the 10900K with just 4 cores active played very well, offering smooth and consistent performance, despite being 25% slower than the 6, 8 and 10-core configurations. The 10105F, though, suffered from noticeable stuttering, and again that was reflected in its much weaker 1% low performance. So while a 10th-gen quad-core with a large L3 cache can play the game just fine, it is still much slower than a 6-core equivalent, whereas going to 8 cores offers no improvement in average frame rate or frame time performance.

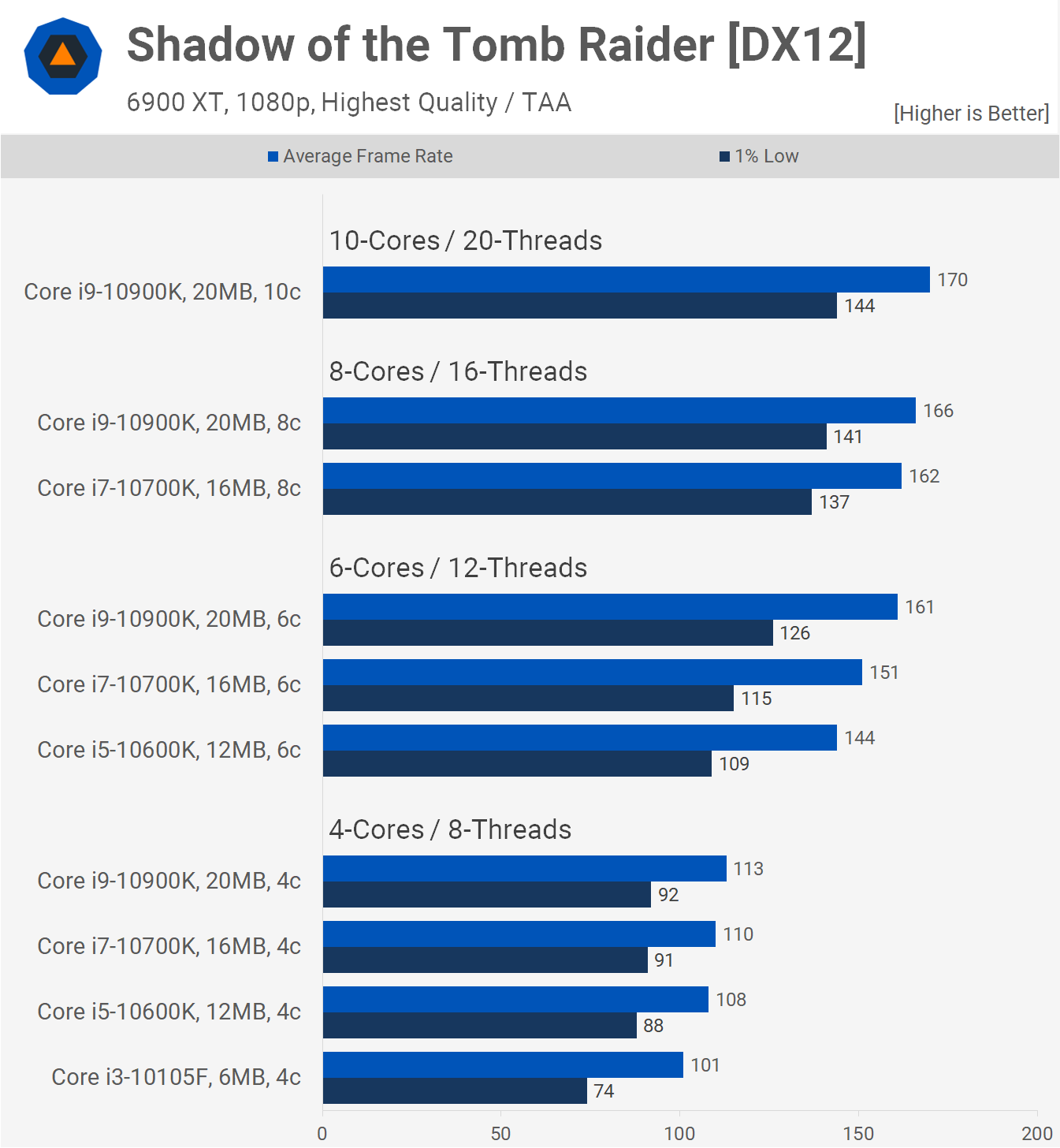

Next up we have Shadow of the Tomb Raider, which is another CPU-demanding game, but again, with just 4 cores enabled, the 10th-gen CPUs aren't able to take advantage of that extra L3 cache like they are with 6 cores. It's not until the L3 cache is reduced to 6MB with the Core i3 part that performance starts to fall away, dropping 1% low performance by 16% when compared to the 10600K.

Testing with the RTX 3090

As we were wrapping up the quad-core testing, we thought it might be interesting to re-run a few of these tests using the GeForce RTX 3090. With 6 cores enabled in SoTR, the 10600K was 13% slower than the 10900K using the Radeon and 15% slower with the GeForce, so not a huge change there.

Then with 4 cores enabled, the 10600K was 4% slower than the 10900K with the Radeon, while we see radically different results with the RTX 3090. Here the 10600K is 14% slower than the 10900K for the average frame rate and 13% slower for the 1% low. This is the result of Nvidia's added overhead from using the CPU for much of its GPU scheduling.

However, it's the Core i3 part that's truly crippled by the software scheduling, tanking 1% low performance to just 54 fps, making it 28% slower than the 10600K, whereas it was 16% slower before. Had we used the RTX 3090 for all testing, the 4-core results in Hitman, Cyberpunk 2077, Battlefield V, and so on might have been much more significant.

We also tested Watch Dogs Legion with both Radeon and GeForce high-end GPUs. Previously, with the Radeon, we didn't see much of a difference between the K-SKU parts: with 6 cores enabled the results were very similar, and 8 cores only offered a slight boost. However, 4 cores drop performance quite a bit. The 10600K, for example, was 17% slower with 4 cores enabled, and we saw similar margins with the 10700K and 10900K. That said, performance between the individual 4-core configurations is similar, and even the Core i3 part manages to hang in there.

Using the RTX 3090 doesn't do much to the 4-core results. The 10600K was 19% slower with just 4 cores enabled when compared to its stock 6-core configuration. Interestingly though, the margins with 6 and 8 cores enabled are quite different, and it seems that the cache plays a larger role with the RTX 3090 installed, presumably because the CPU has to do more work.

It's also interesting that we don't see the same scaling with 4 cores enabled, but it does appear that this is too few cores to take advantage of the increased L3 cache capacity.

What We Learned

This was an interesting look at CPU performance, but probably not what many of you were expecting. We believe the expectation was that with fewer cores, the larger L3 cache of the 10900K would play an even greater role, but for the most part that doesn't seem to be the case.

It's clear that for mid to high-end gaming, quad-cores are officially out. We've known this for some time, which is why AMD and Intel have stopped producing mid-range quad-core processors. For lower-end systems, however, quad-cores still work well, though it should be clarified that when we say quad-cores, we mean 4-core/8-thread processors that do support simultaneous multi-threading.

Frame time performance can be seen suffering in demanding titles such as Battlefield V, Shadow of the Tomb Raider and Cyberpunk 2077, but in most games, if you're using a budget GPU such as the Radeon RX 5500 XT, GeForce GTX 1650 Super, or anything slower, a decent quad-core will enable an acceptable level of performance.

Frame capping to 60 fps will help smooth out frame rates in a title like Cyberpunk 2077, as it reduces CPU load as well as frame-to-frame variance. So if you're running into stuttering issues, try capping the frame rate to something more achievable, as that can help.
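Conceptually, a frame limiter waits out whatever is left of each frame's time budget (about 16.7 ms at 60 fps) before starting the next frame, so the CPU stops racing ahead and frames are delivered at a steadier pace. The sketch below is a simplified, hypothetical limiter loop, not how any particular game or driver implements capping; real limiters use higher-precision timers and often spin-wait for the final fraction of a millisecond.

```python
import time

def remaining_budget(frame_start: float, now: float, cap_fps: float) -> float:
    """Seconds left in this frame's time budget (0 if the frame ran long)."""
    budget = 1.0 / cap_fps
    return max(0.0, budget - (now - frame_start))

def run_capped(render_frame, cap_fps: float = 60.0, frames: int = 5) -> None:
    """Call `render_frame` repeatedly without exceeding `cap_fps`."""
    for _ in range(frames):
        start = time.perf_counter()
        render_frame()
        # Sleep off the leftover budget instead of starting the next frame early
        time.sleep(remaining_budget(start, time.perf_counter(), cap_fps))

# Hypothetical usage: an empty "frame" capped to 60 fps for three frames
run_capped(lambda: None, cap_fps=60.0, frames=3)
```

A frame that finishes early gets padded to the budget, while one that runs long gets no sleep at all, which is why a cap only smooths pacing when it is set below what the CPU can consistently deliver.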

Source: https://www.techspot.com/article/2315-pc-gaming-quad-core-cpu/

Posted by: lopezbeturped1953.blogspot.com
